October 5, 2025

Database migration is far more than just moving data from point A to point B; it's a high-stakes technical operation where success is measured by uptime, data integrity, and post-migration performance. A minor oversight can quickly cascade into catastrophic data loss, extended application downtime, and significant financial repercussions. In a process where precision is paramount, relying on a haphazard approach is not an option. A well-defined strategy is the only reliable path to a successful outcome.

This guide cuts through the noise to deliver a curated list of the most critical database migration best practices, drawn from real-world successes and failures. Widely recognized guidelines, such as the 8 Essential Data Migration Best Practices, provide a solid foundation; this article expands on that foundation with more advanced techniques.

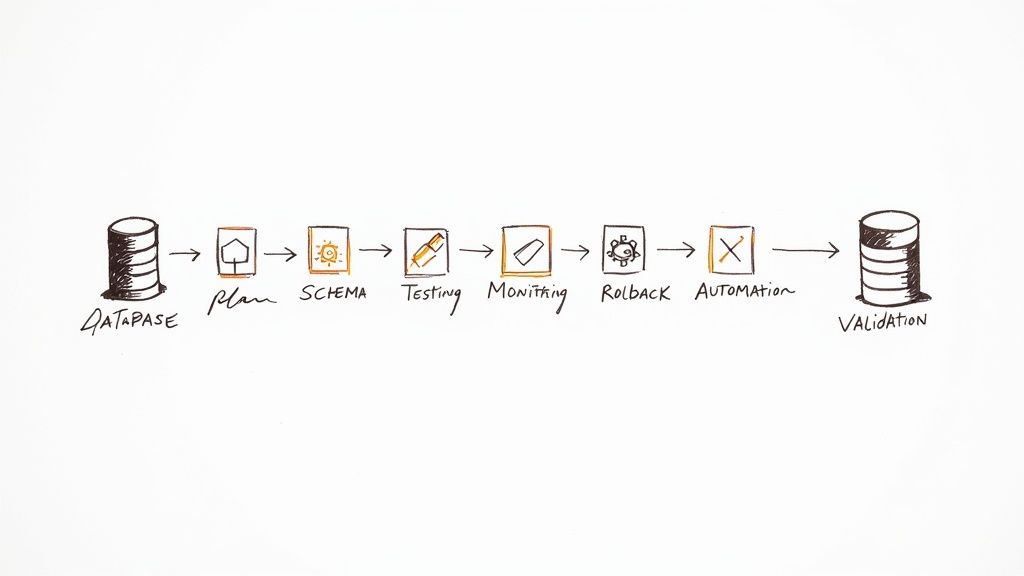

Whether you're moving to the cloud, modernizing a legacy system, or consolidating disparate databases, the seven strategies outlined here provide a definitive roadmap. You will learn how to navigate technical complexities, avoid common pitfalls, and execute a migration that not only works but enhances your entire data infrastructure for the future. Let's dive into the practices that separate a seamless transition from a costly, disruptive disaster.

The foundation of any successful database migration is a meticulously detailed pre-migration assessment and planning phase. This practice is far more than a simple checklist; it's a deep-dive investigation into your entire data ecosystem. It involves a thorough evaluation of the current database environment, including its architecture, data dependencies, application integrations, and specific business requirements before a single line of code is written or a single byte of data is moved.

This initial stage sets the trajectory for the entire project. Neglecting it often leads to scope creep, unexpected downtime, data loss, and budget overruns. A comprehensive plan keeps all stakeholders aligned and ensures potential roadblocks are identified and mitigated early.

A robust assessment prevents surprises. By cataloging all database objects (tables, views, stored procedures), understanding complex data relationships, and defining performance benchmarks, you create a detailed migration roadmap. This blueprint includes clear timelines, resource allocation, and measurable success criteria, turning a potentially chaotic process into a structured, manageable project.
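As a minimal sketch of the cataloging step, the Python script below inventories schema objects, foreign-key dependencies, and baseline row counts. It assumes a SQLite source purely so the example runs anywhere. Other engines expose the same information through their own catalogs (for example, `information_schema`). The function names are hypothetical.

```python
import sqlite3


def catalog_objects(conn: sqlite3.Connection) -> dict[str, list[str]]:
    """Group every user-defined schema object (table, view, index, trigger) by type."""
    rows = conn.execute(
        "SELECT type, name FROM sqlite_master "
        "WHERE name NOT LIKE 'sqlite_%' ORDER BY type, name"
    ).fetchall()
    inventory: dict[str, list[str]] = {}
    for obj_type, name in rows:
        inventory.setdefault(obj_type, []).append(name)
    return inventory


def table_dependencies(conn: sqlite3.Connection, table: str) -> set[str]:
    """Return the tables that `table` references through foreign keys."""
    rows = conn.execute(
        'SELECT "table" FROM pragma_foreign_key_list(?)', (table,)
    ).fetchall()
    return {referenced for (referenced,) in rows}


def row_counts(conn: sqlite3.Connection, tables: list[str]) -> dict[str, int]:
    """Baseline row counts, useful later as one (coarse) validation check."""
    return {t: conn.execute(f'SELECT COUNT(*) FROM "{t}"').fetchone()[0] for t in tables}


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY,
            customer_id INTEGER REFERENCES customers(id),
            total REAL
        );
        CREATE VIEW big_orders AS SELECT * FROM orders WHERE total > 100;
        CREATE INDEX idx_orders_customer ON orders(customer_id);
    """)
    inventory = catalog_objects(conn)
    print(inventory)
    for table in inventory["table"]:
        print(table, "depends on", table_dependencies(conn, table) or "nothing")
    print(row_counts(conn, inventory["table"]))
```

The dependency map is what lets a team sequence the migration: tables with no outgoing foreign keys can move first, and their dependents follow.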

For example, when Capital One migrated over a thousand applications to the cloud, their extensive assessment phase was crucial for understanding the intricate dependencies between systems, allowing them to sequence the migration logically and minimize business disruption.

To execute a successful pre-migration assessment, consider the following steps:

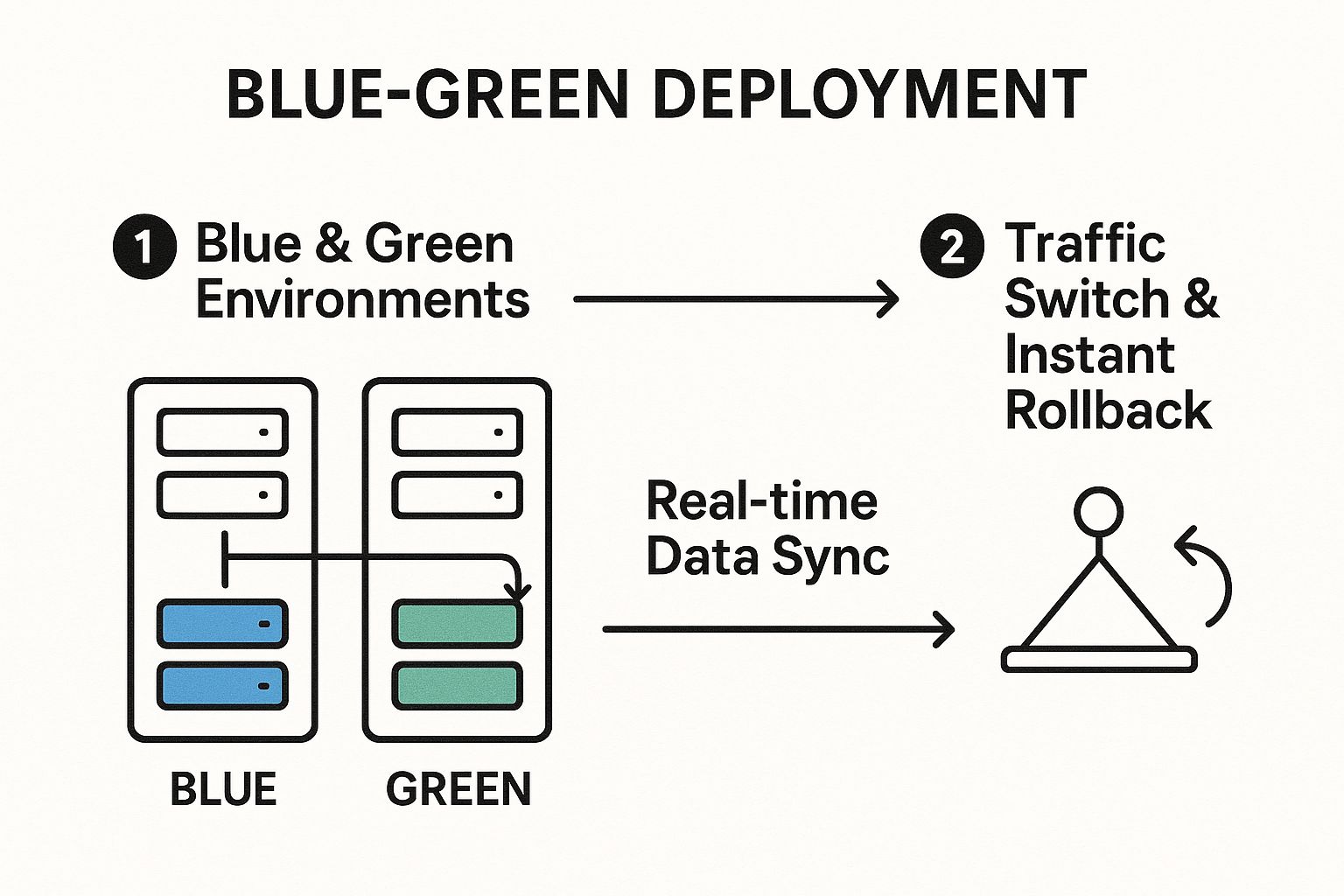

For mission-critical systems where continuous availability is paramount, a zero-downtime migration strategy is not just a goal; it's a requirement. The blue-green deployment model offers a powerful and elegant solution. This approach involves maintaining two identical, parallel production environments, conventionally named "blue" and "green." One environment (e.g., blue) handles live user traffic while the other (green) is idle and serves as the migration target.

The core of this strategy lies in its ability to de-risk the cutover process. You perform the database migration on the idle green environment, validate it exhaustively, and only then reconfigure the traffic router (for example, a load balancer or connection proxy) to direct live traffic to the newly updated green environment. The old blue environment is kept on standby, ready for an immediate rollback if any issues arise. This methodology is a cornerstone of modern database migration best practices for high-availability systems.
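The cutover logic can be sketched in a few lines of Python. This is an illustrative model, not a production router. `BlueGreenRouter` and `Environment` are hypothetical names, and in practice the switch would be a load balancer, DNS, or connection-proxy change rather than an in-process flag. What it shows is that promotion is gated on validation, and that rollback is simply a flip back to the environment kept on standby.

```python
import threading
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Environment:
    name: str
    dsn: str  # hypothetical connection string for this environment's database


class BlueGreenRouter:
    """Sends all traffic to exactly one of two environments at a time."""

    def __init__(self, blue: Environment, green: Environment) -> None:
        self._envs = {"blue": blue, "green": green}
        self._live = "blue"
        self._previous: Optional[str] = None  # environment kept on standby for rollback
        self._lock = threading.Lock()  # the switch must be atomic for concurrent callers

    @staticmethod
    def _other(name: str) -> str:
        return "green" if name == "blue" else "blue"

    @property
    def live(self) -> Environment:
        with self._lock:
            return self._envs[self._live]

    @property
    def idle(self) -> Environment:
        with self._lock:
            return self._envs[self._other(self._live)]

    def switch(self, validated: bool) -> Environment:
        """Promote the idle environment, but only once it has passed validation."""
        if not validated:
            raise RuntimeError("refusing to switch: idle environment not validated")
        with self._lock:
            self._previous = self._live
            self._live = self._other(self._live)
            return self._envs[self._live]

    def rollback(self) -> Environment:
        """Send traffic back to the environment that was live before the last switch."""
        with self._lock:
            if self._previous is None:
                raise RuntimeError("nothing to roll back to")
            self._live, self._previous = self._previous, None
            return self._envs[self._live]


router = BlueGreenRouter(Environment("blue", "db-blue"), Environment("green", "db-green"))
print("live:", router.live.name)                            # blue
print("after switch:", router.switch(validated=True).name)  # green
print("after rollback:", router.rollback().name)            # blue
```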

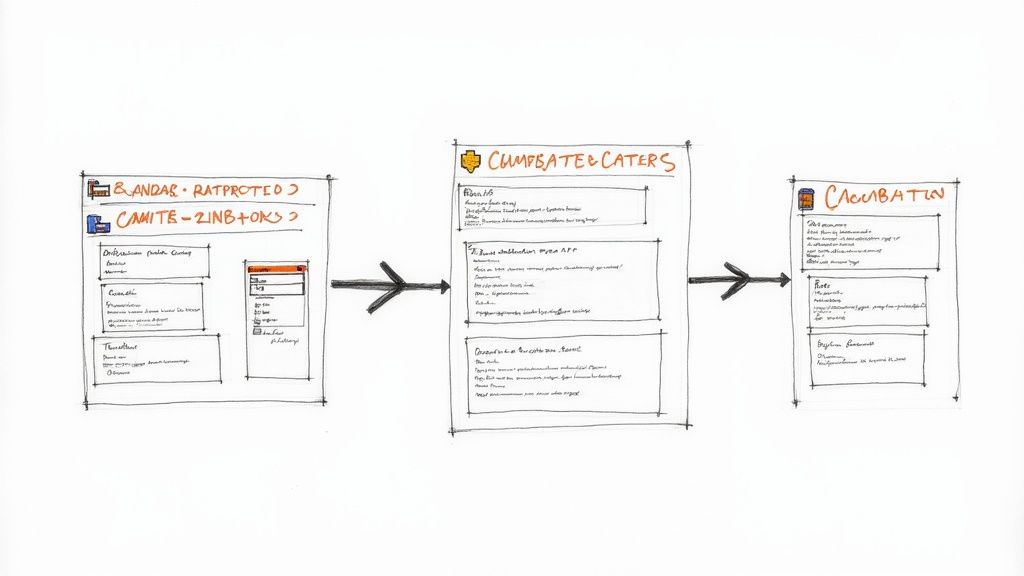

The following infographic illustrates the fundamental workflow of a blue-green database migration, showing how traffic is managed between the two identical environments.

This process flow highlights the critical steps: maintaining real-time data synchronization between environments and executing a clean, reversible traffic switch, which together eliminate user-facing downtime.

A blue-green approach transforms a high-stakes, big-bang cutover into a controlled, low-risk event. It provides a safety net that traditional migration methods lack, allowing you to validate the new database in a production-identical setting without affecting live users. This eliminates the need for lengthy maintenance windows and provides near-instantaneous rollback capabilities, drastically reducing business risk.

For example, Spotify famously used this technique when migrating its user profile data from a sharded PostgreSQL setup to Cassandra. By synchronizing data to the new Cassandra-based "green" environment and slowly routing traffic, they were able to perform the massive migration without any noticeable service disruption to their millions of users.

To execute a successful blue-green database migration, consider the following steps:

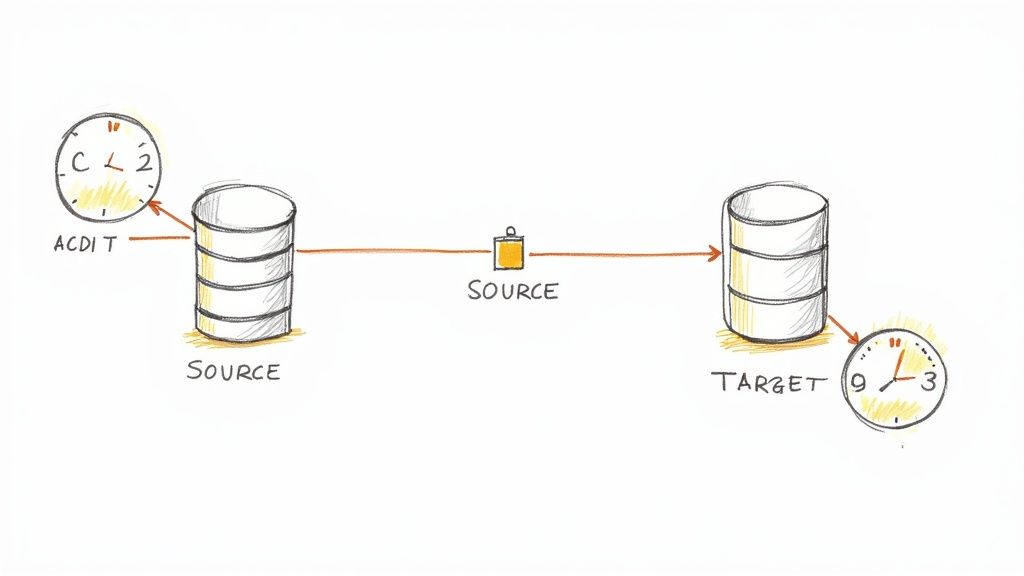

For large-scale databases where extended downtime is unacceptable, a full-scale, "big bang" migration is often impractical. This is where incremental data migration using Change Data Capture (CDC) becomes an invaluable strategy. CDC is a technique that identifies, captures, and delivers the changes made to database tables in near real time, allowing for continuous synchronization from the source to the target system throughout the migration process.

Instead of a single, massive data transfer, CDC enables a phased approach. An initial full load of the data is performed, after which only the incremental changes (inserts, updates, and deletes) are replicated. This method drastically minimizes the final cutover window, reduces risk, and ensures data consistency between the old and new systems. Embracing this technique is a cornerstone of modern database migration best practices.
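A minimal sketch of the full-load-then-replay pattern follows. It uses trigger-based CDC on SQLite so it is self-contained. Log-based tools such as Debezium read the database's transaction log instead, but the shape is the same: record a changelog position, copy a snapshot, then replay ordered inserts, updates, and deletes. The table and function names are hypothetical, and the sketch assumes primary keys are never updated.

```python
import sqlite3

SOURCE_DDL = """
CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL);
-- Changelog filled by triggers: one ordered row per insert, update, or delete.
CREATE TABLE accounts_changes (
    seq INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT NOT NULL,
    id INTEGER NOT NULL,
    balance INTEGER
);
CREATE TRIGGER accounts_ins AFTER INSERT ON accounts BEGIN
    INSERT INTO accounts_changes (op, id, balance) VALUES ('I', NEW.id, NEW.balance);
END;
CREATE TRIGGER accounts_upd AFTER UPDATE ON accounts BEGIN
    INSERT INTO accounts_changes (op, id, balance) VALUES ('U', NEW.id, NEW.balance);
END;
CREATE TRIGGER accounts_del AFTER DELETE ON accounts BEGIN
    INSERT INTO accounts_changes (op, id, balance) VALUES ('D', OLD.id, NULL);
END;
"""


def high_water_mark(src: sqlite3.Connection) -> int:
    return src.execute("SELECT COALESCE(MAX(seq), 0) FROM accounts_changes").fetchone()[0]


def initial_load(src: sqlite3.Connection, dst: sqlite3.Connection) -> int:
    """Copy a full snapshot and return the changelog position to replay from."""
    # Take the position BEFORE the snapshot. Replaying a change the snapshot
    # already contains is harmless because apply_changes() is idempotent.
    mark = high_water_mark(src)
    rows = src.execute("SELECT id, balance FROM accounts").fetchall()
    dst.executemany("INSERT OR REPLACE INTO accounts (id, balance) VALUES (?, ?)", rows)
    dst.commit()
    return mark


def apply_changes(src: sqlite3.Connection, dst: sqlite3.Connection, after_seq: int) -> int:
    """Replay changes with seq > after_seq in order; return the new position."""
    changes = src.execute(
        "SELECT seq, op, id, balance FROM accounts_changes WHERE seq > ? ORDER BY seq",
        (after_seq,),
    ).fetchall()
    for seq, op, row_id, balance in changes:
        if op == "D":
            dst.execute("DELETE FROM accounts WHERE id = ?", (row_id,))
        else:  # inserts and updates both become an idempotent upsert
            dst.execute(
                "INSERT OR REPLACE INTO accounts (id, balance) VALUES (?, ?)", (row_id, balance)
            )
        after_seq = seq
    dst.commit()
    return after_seq


src = sqlite3.connect(":memory:")
src.executescript(SOURCE_DDL)
src.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 200)])
src.commit()

dst = sqlite3.connect(":memory:")
dst.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL)")
position = initial_load(src, dst)

# The source stays live and keeps taking writes during the migration.
src.execute("UPDATE accounts SET balance = 150 WHERE id = 1")
src.execute("DELETE FROM accounts WHERE id = 2")
src.execute("INSERT INTO accounts VALUES (3, 300)")
src.commit()

position = apply_changes(src, dst, position)  # repeat until lag is small, then cut over
print(dst.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall())
```

Because each replay pass only moves the changes since the last position, the final cutover window shrinks to the time needed to drain the last few changes.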

CDC minimizes downtime to near-zero levels, which is critical for 24/7 business operations. This approach allows the source database to remain fully operational while the migration is in progress, preventing revenue loss and customer disruption. By synchronizing data continuously, it provides multiple opportunities for verification and testing against live data streams, ensuring a much smoother and more reliable final cutover.

For instance, Uber successfully migrated its core trip data from PostgreSQL to its own schemaless database using a CDC-based system. This allowed the company to move petabytes of data without interrupting its real-time ride-hailing services, a feat impossible with traditional migration methods.

To effectively implement an incremental migration with CDC, follow these steps:

Ensuring data integrity is the ultimate measure of a migration's success. This is where comprehensive data validation and quality assurance come into play. This practice involves a systematic, multi-stage process of verifying data accuracy, completeness, and consistency before, during, and after the migration. It's not just about checking if the data arrived; it's about confirming the data is correct, usable, and retains its business value in the new environment.

Without rigorous validation, you risk "garbage in, garbage out" on a massive scale, leading to corrupted reports, application failures, and a total loss of user trust. This meticulous checking process is a cornerstone of modern database migration best practices, transforming a high-risk data move into a verifiable success.

Data validation provides the empirical proof that the migration was successful. By establishing quality benchmarks pre-migration and comparing them against post-migration results, you can quantitatively measure success. This process catches subtle but critical errors like data truncation, incorrect data type conversions, or broken relationships that a simple row count would miss.
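One way to catch defects that a row count misses is to fingerprint every row on both sides and compare by primary key. The sketch below assumes SQLite connections and a single-column key, and `table_fingerprint` and `compare` are hypothetical helpers. Hashing `repr()` of each row means a value that changed type (for example, an integer stored as text) is flagged, so legitimate type mappings would need to be normalized before comparison.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key, columns):
    """Map each primary-key value to a SHA-256 digest of the row's other columns."""
    cols = ", ".join(f'"{c}"' for c in columns)
    digests = {}
    for row in conn.execute(f'SELECT "{key}", {cols} FROM "{table}"'):
        # repr() keeps type information: 750 (int) and '750' (text) hash differently.
        digests[row[0]] = hashlib.sha256(repr(row[1:]).encode("utf-8")).hexdigest()
    return digests


def compare(source, target, table, key, columns):
    src = table_fingerprint(source, table, key, columns)
    dst = table_fingerprint(target, table, key, columns)
    return {
        "row_count_match": len(src) == len(dst),
        "missing_in_target": sorted(src.keys() - dst.keys()),
        "unexpected_in_target": sorted(dst.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
    }


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, credit INTEGER)")
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)", [(1, "Alice Johnson", 500), (2, "Bob Lee", 750)]
)

# Simulated defects: `credit` mapped to TEXT, and one name truncated in transit.
target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, credit TEXT)")
target.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)", [(1, "Alice Joh", 500), (2, "Bob Lee", 750)]
)

report = compare(source, target, "customers", "id", ["name", "credit"])
print(report)
```

In this demo both tables hold two rows, so a row-count check passes, yet every row is flagged: one name was truncated and the `credit` column was converted to the wrong type.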

For instance, when ING Bank undertook a massive core banking system migration, their success hinged on comprehensive data quality checks at every stage. This ensured that sensitive financial records and customer data remained 100% accurate, preventing catastrophic business and regulatory consequences.

To implement a robust data validation strategy, focus on these key actions:

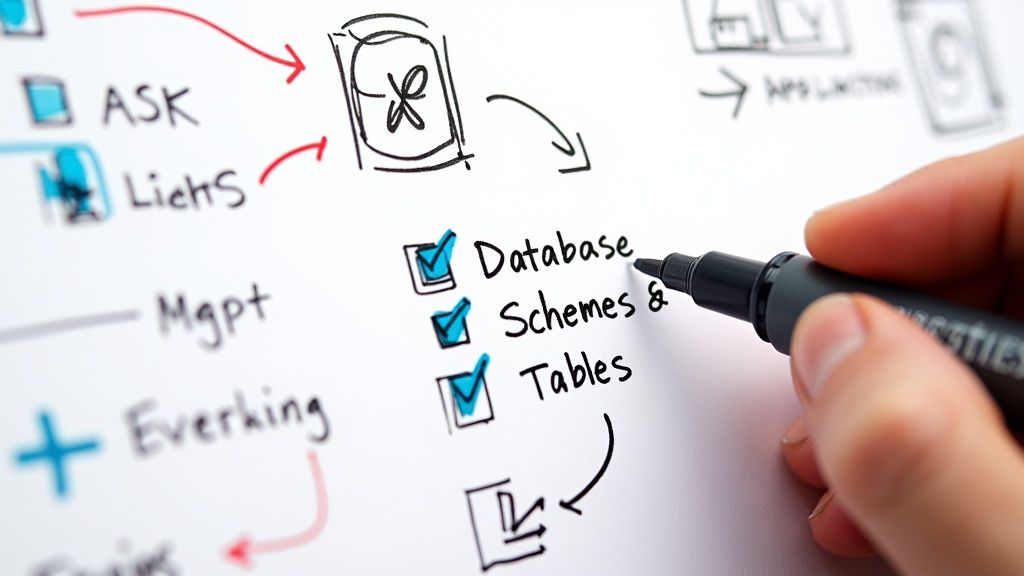

Treating your database schema as code is a transformative practice that brings predictability and reliability to your migration process. This approach, known as schema evolution and version control, involves systematically managing and tracking all changes to your database structure using the same principles applied to application source code. It ensures that every modification is scripted, versioned, and reproducible across all environments, from local development to production.

By moving away from manual SQL changes executed directly on the database, you eliminate a significant source of human error. This methodology provides a clear, auditable history of your database's structure, which is invaluable for debugging, collaboration, and maintaining data integrity. Implementing this is a cornerstone of modern database migration best practices, enabling agile development cycles and safer deployments.

A version-controlled schema prevents environmental drift, where development, staging, and production databases fall out of sync. This consistency is crucial for reliable testing and predictable deployments. When a schema change is needed, it’s not an ad-hoc command but a committed migration script that can be peer-reviewed, tested in an automated pipeline, and applied consistently everywhere.
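A minimal migration runner illustrates the idea. It is not Flyway's implementation or API; `migrate`, `MIGRATIONS`, and the `schema_version` table are hypothetical. The pattern is similar to what such tools do, though: record each applied version in the database itself, apply only pending scripts in version order, and wrap each script together with its version record in one transaction so that a failure leaves no half-applied change.

```python
import sqlite3

# Hypothetical versioned migrations, as they might be committed to source control
# (tools like Flyway keep each one in its own file, e.g. V2__add_users_created_at.sql).
MIGRATIONS = [
    (1, "create users", "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)"),
    (2, "add users.created_at", "ALTER TABLE users ADD COLUMN created_at TEXT"),
    (3, "unique email", "CREATE UNIQUE INDEX idx_users_email ON users(email)"),
]


def migrate(conn: sqlite3.Connection, migrations=MIGRATIONS) -> list[int]:
    """Apply pending migrations in version order and return the versions applied."""
    conn.isolation_level = None  # manage transactions explicitly below
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_version ("
        " version INTEGER PRIMARY KEY,"
        " description TEXT NOT NULL,"
        " applied_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP)"
    )
    current = conn.execute("SELECT COALESCE(MAX(version), 0) FROM schema_version").fetchone()[0]
    applied = []
    for version, description, sql in sorted(migrations):
        if version <= current:
            continue  # already applied in this environment
        conn.execute("BEGIN")
        try:
            # The change and its version record commit together or not at all.
            conn.execute(sql)
            conn.execute(
                "INSERT INTO schema_version (version, description) VALUES (?, ?)",
                (version, description),
            )
            conn.execute("COMMIT")
        except sqlite3.Error:
            conn.execute("ROLLBACK")
            raise
        applied.append(version)
    return applied


conn = sqlite3.connect(":memory:")
print(migrate(conn))  # [1, 2, 3] on a fresh database
print(migrate(conn))  # [] on every later run: nothing pending
```

SQLite, like PostgreSQL, supports transactional DDL; on engines where DDL statements commit implicitly, such as MySQL, the all-or-nothing guarantee per script is weaker.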

For example, Atlassian leverages Flyway to manage database versioning for its complex products like JIRA and Confluence. This allows their distributed development teams to collaborate effectively on schema changes, ensuring that the database state is always consistent with the application code version being deployed, which drastically reduces deployment failures.

To effectively implement schema version control, integrate these practices into your workflow:

A migration can be technically successful but still be a business failure if the new database performs poorly. Performance testing and optimization is the practice of rigorously evaluating the target database's speed, scalability, and responsiveness under realistic load conditions. This involves more than just checking if data arrived intact; it’s about ensuring the new system can handle production workloads efficiently and meet or exceed user expectations.

This phase validates the chosen architecture, infrastructure, and query performance before the final cutover, preventing post-launch slowdowns that can erode user trust and impact revenue. By simulating real-world usage patterns, teams can proactively identify and fix bottlenecks, from inefficient queries to under-provisioned hardware. This proactive approach is a cornerstone of any list of database migration best practices.

Failing to test for performance is a high-stakes gamble. A new database environment might react differently to queries or concurrent connections, and assumptions about performance rarely hold true. Robust testing provides empirical data to confirm the new system is ready for prime time, ensuring a smooth transition for end-users and avoiding emergency "fire-fighting" immediately after go-live.
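The mechanics of measuring latency and spotting an inefficient query can be sketched as follows. This single-threaded Python example is only a stand-in for a real load test, which would replay production-shaped, concurrent traffic against production-sized data; the helper names, the 20,000-row dataset, and the lookup pattern are all hypothetical. It records per-query latency, reports p50/p95/p99, and shows how a missing index surfaces both in the numbers and in the query plan.

```python
import sqlite3
import statistics
import time


def measure_latency_ms(conn, sql, workload):
    """Execute `sql` once per parameter tuple; return per-call latencies in ms."""
    samples = []
    for params in workload:
        start = time.perf_counter()
        conn.execute(sql, params).fetchall()
        samples.append((time.perf_counter() - start) * 1000)
    return samples


def summarize(samples):
    """p50/p95/p99 from raw samples (quantiles(n=100) returns 99 cut points)."""
    cuts = statistics.quantiles(samples, n=100)
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


def query_plan(conn, sql, params):
    """The 'detail' column of EXPLAIN QUERY PLAN, e.g. whether a table is scanned."""
    return [row[-1] for row in conn.execute(f"EXPLAIN QUERY PLAN {sql}", params)]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 2000, i * 0.5) for i in range(20_000)],
)
conn.commit()

QUERY = "SELECT id, total FROM orders WHERE customer_id = ?"
workload = [(c,) for c in range(0, 2000, 10)]  # 200 lookups, hypothetical access pattern

before = summarize(measure_latency_ms(conn, QUERY, workload))
plan_before = query_plan(conn, QUERY, (1,))
conn.execute("CREATE INDEX idx_orders_customer ON orders(customer_id)")
after = summarize(measure_latency_ms(conn, QUERY, workload))
plan_after = query_plan(conn, QUERY, (1,))

print("before:", plan_before, {k: round(v, 3) for k, v in before.items()})
print("after: ", plan_after, {k: round(v, 3) for k, v in after.items()})
```

Reporting tail percentiles rather than an average matters here, because users experience the slow tail, and a migration that quietly drops an index usually shows up there first.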

For instance, when Twitter engineers migrated core services to a new database infrastructure, they conducted large-scale performance testing to confirm the system could absorb peak tweet volumes. This rigorous validation was essential to maintaining platform stability and user experience throughout the migration.

To execute effective performance testing and optimization, consider the following steps:

Even with the most meticulous planning, unforeseen issues can arise during a database migration. A robust rollback strategy is your safety net, providing a clear, pre-defined path to revert to the original system if critical failures occur. This practice involves creating and validating a plan to undo the migration, ensuring that business operations can be restored quickly with minimal data loss or disruption.

Failing to prepare for a potential rollback transforms a recoverable problem into a catastrophic event. A well-documented contingency plan provides the confidence to proceed with the migration, knowing there is a controlled way to retreat if necessary. This preparation is a cornerstone of professional database migration best practices, distinguishing a calculated risk from a reckless gamble.

A rollback plan is a form of specialized insurance against project failure. It defines the specific triggers for initiating a rollback, such as data corruption, unacceptable performance degradation, or critical application failures. Having this procedure in place prevents panicked, ad-hoc decision-making in a high-stress situation, which can often worsen the problem. A robust rollback strategy is essentially a specific form of contingency plan, aligning closely with principles outlined in a comprehensive modern IT disaster recovery plan.
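Rollback triggers are most useful when they are written down as explicit thresholds before cutover. The sketch below encodes the three triggers named above (data corruption, performance degradation, application failures) as a hypothetical policy object. The threshold values are placeholders, not recommendations; real values would come from the success criteria agreed during planning.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RollbackPolicy:
    # Placeholder thresholds; real values come from the success criteria agreed during planning.
    max_checksum_mismatches: int = 0
    max_p95_latency_ms: float = 250.0
    max_error_rate: float = 0.01  # fraction of failed requests


@dataclass(frozen=True)
class HealthSnapshot:
    checksum_mismatches: int
    p95_latency_ms: float
    error_rate: float


def rollback_reasons(snapshot: HealthSnapshot, policy: RollbackPolicy) -> list[str]:
    """Return every tripped trigger; an empty list means stay on the new system."""
    reasons = []
    if snapshot.checksum_mismatches > policy.max_checksum_mismatches:
        reasons.append(f"data corruption: {snapshot.checksum_mismatches} mismatched rows")
    if snapshot.p95_latency_ms > policy.max_p95_latency_ms:
        reasons.append(f"performance degradation: p95 {snapshot.p95_latency_ms:.0f} ms")
    if snapshot.error_rate > policy.max_error_rate:
        reasons.append(f"application failures: error rate {snapshot.error_rate:.1%}")
    return reasons


policy = RollbackPolicy()
healthy = HealthSnapshot(checksum_mismatches=0, p95_latency_ms=120.0, error_rate=0.002)
degraded = HealthSnapshot(checksum_mismatches=3, p95_latency_ms=900.0, error_rate=0.002)
print(rollback_reasons(healthy, policy))   # []
print(rollback_reasons(degraded, policy))
```

Agreeing on these thresholds in advance, whether in code or in a written runbook, is what replaces ad-hoc judgment calls with a decision the team already made calmly.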

For example, when TSB Bank in the UK attempted a major IT migration, the lack of a viable rollback plan contributed to a prolonged outage that locked millions of customers out of their accounts, costing the company hundreds of millions of pounds and causing severe reputational damage.

To develop a reliable rollback and contingency plan, consider these steps:

Navigating a database migration is a defining challenge for any organization. It's far more than a technical exercise in moving data from point A to point B. As we've explored, a successful migration is a strategic initiative built on a foundation of meticulous planning, innovative execution, and robust validation. From the initial comprehensive assessment to establishing a rock-solid rollback plan, each step is critical to ensuring business continuity, data integrity, and system performance. Mastering these database migration best practices is not just about avoiding disaster; it's about unlocking the full potential of your new data infrastructure.

The journey through best practices like zero-downtime blue-green deployments, incremental migration with Change Data Capture (CDC), and rigorous performance testing highlights a central theme: proactive control. A reactive approach invites unacceptable risks, including extended downtime, data corruption, and a degraded user experience. By contrast, a proactive strategy empowers your team to anticipate challenges, validate every byte of data, and execute the switchover with confidence and precision.

To distill our deep dive into actionable insights, remember these core principles:

Ultimately, a database migration is about future-proofing your organization. It's about building a scalable, agile, and resilient foundation that can support growth and innovation. For member-based organizations, professional associations, and event organizers, this technical foundation directly impacts your ability to serve your community. A successful migration enables faster access to information, more reliable services, and a better overall member experience.

This strategic alignment of technology and community is crucial. When your data is unified and accessible, you gain deeper insights into member engagement, can personalize communications, and streamline operations. The principles of a great migration (planning, validation, and seamless execution) mirror the principles of great community management. Both require a clear strategy and the right tools to bring that strategy to life, ensuring every decision is data-informed and member-focused. Adopting these database migration best practices is your first step toward building a more connected and data-driven organization.

Is your fragmented tech stack holding your community back? A successful data migration is only half the battle. GroupOS provides a unified platform to manage your members, events, and content, complete with seamless member data migration services to ensure a smooth transition. Schedule a demo today to see how you can consolidate your tools and build a stronger, more engaged community.